Today’s platform release focuses heavily on our edge compute platform features, with added support for a new protocol, the ability to run edge workflows shipped with your hardware, and additional improvements to the development experience.

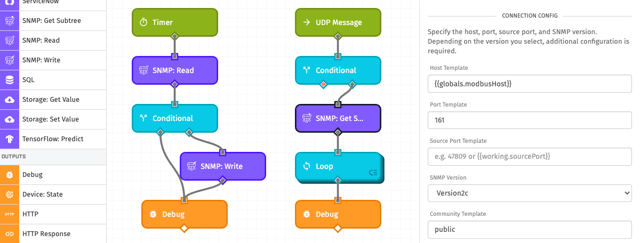

SNMP Edge Workflow Nodes

Simple Network Management Protocol (SNMP) allows for reading and writing characteristics of devices connected to a local network. The protocol is a popular method for interacting with a variety of hardware: IoT sensors, printers, smart appliances, networking equipment, and servers. While network administrators originally shied away from SNMP because of security concerns, many are now adopting it as a local communication method for the Internet of Things, thanks to its ubiquity across a wide variety of devices and the improved security features in later versions.

With today’s release, the Losant Edge Agent can now act as an SNMP manager, initiating requests to other devices on the network using a suite of new workflow nodes:

- SNMP: Get Subtree Node - Reads all values beneath a given root OID.

- SNMP: Read Node - Allows for reading values from one or more OIDs.

- SNMP: Write Node - Allows for writing values to one or more OIDs.

The nodes support all three major versions of SNMP, including the far more secure Version 3 (which supports authentication as well as encryption).
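To make the "beneath a given root OID" idea concrete, here is a minimal Python sketch of how subtree membership can be decided. It is not the Edge Agent's implementation; the function names and the `SAMPLE` values are assumptions made for illustration (the enterprise OID in particular is made up). The key point is that OIDs are compared arc by arc as numbers, not as strings.

```python
from typing import Dict, Tuple

Oid = Tuple[int, ...]


def parse_oid(text: str) -> Oid:
    """Turn a dotted OID string such as '1.3.6.1.2.1' into a tuple of ints."""
    return tuple(int(part) for part in text.strip(".").split("."))


def in_subtree(root: Oid, candidate: Oid) -> bool:
    """Return True when candidate lies strictly beneath root in the OID tree."""
    return len(candidate) > len(root) and candidate[: len(root)] == root


def get_subtree(values: Dict[str, object], root: str) -> Dict[str, object]:
    """Return the entries beneath root, ordered numerically arc by arc."""
    root_oid = parse_oid(root)
    matches = [
        (parse_oid(key), key)
        for key in values
        if in_subtree(root_oid, parse_oid(key))
    ]
    return {key: values[key] for _, key in sorted(matches)}


# Hypothetical values standing in for what a device might report.
SAMPLE = {
    "1.3.6.1.2.1.1.5.0": "sensor-01",       # sysName
    "1.3.6.1.2.1.1.1.0": "Example device",  # sysDescr
    "1.3.6.1.2.1.2.1.0": 4,                 # ifNumber
    "1.3.6.1.4.1.99999.1.0": 21.5,          # made-up enterprise OID
}

if __name__ == "__main__":
    # Everything under the standard "system" group (1.3.6.1.2.1.1).
    for oid, value in get_subtree(SAMPLE, "1.3.6.1.2.1.1").items():
        print(oid, value)
```

Comparing parsed tuples rather than raw strings avoids a common mistake: `1.3.6.1.2.1.10` starts with the text `1.3.6.1.2.1.1` but is not part of that subtree.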

As for SNMP traps, it is usually possible to listen for those using a UDP Trigger listening on port 162, which is the default port that SNMP-enabled devices send trap notifications to.
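For readers who want to see what "listening on UDP port 162" amounts to outside of a workflow, the following Python sketch opens a UDP socket and waits for one datagram. It is only an illustration of the transport, not a trap decoder and not how the UDP Trigger is implemented; the function names are assumptions. The one protocol fact it relies on is that SNMP messages are BER-encoded sequences, so the first byte is `0x30`.

```python
import socket
from typing import Tuple


def open_udp_listener(host: str = "0.0.0.0", port: int = 162) -> socket.socket:
    """Bind a UDP socket; 162 is the default SNMP trap port."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.bind((host, port))
    return sock


def receive_datagram(
    sock: socket.socket, timeout: float = 5.0, max_size: int = 65535
) -> Tuple[bytes, str]:
    """Wait for one datagram and return its raw bytes and the sender's IP."""
    sock.settimeout(timeout)
    data, (sender_ip, _sender_port) = sock.recvfrom(max_size)
    return data, sender_ip


def looks_like_snmp(data: bytes) -> bool:
    """Cheap sanity check: an SNMP message starts with a BER SEQUENCE tag."""
    return len(data) > 0 and data[0] == 0x30


if __name__ == "__main__":
    listener = open_udp_listener()
    try:
        payload, sender = receive_datagram(listener, timeout=30.0)
        print(sender, len(payload), looks_like_snmp(payload))
    except socket.timeout:
        print("no datagram received")
    finally:
        listener.close()
```

On most Unix-like systems, binding to a port below 1024 requires elevated privileges, so a device may need to be configured to send traps to a higher port if the listener runs as an unprivileged user.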

Adding support for this protocol opens up Losant’s edge compute functionality to a much broader range of device types, and we’re excited to see what our users build with these new workflow nodes.

On-Disk Edge Workflows

Today’s release also includes the ability to execute edge workflows that are stored directly on the device’s file system, as opposed to requiring a deployment from the cloud platform first.

Before this update, it was impossible to run edge workflows without first connecting to the Losant MQTT broker at least once to receive a set of workflows to run. The ability to load workflows from disk opens up a number of possibilities; for example …

- Edge agents can now take advantage of on-demand device registration, where an on-disk workflow can communicate with an experience endpoint to create a device in your application, generate an access key and secret, and return those to the agent in the endpoint reply.

- Hardware that is part of a product rollout can start functioning, in some capacity, before receiving one or more edge workflows to execute on the device.

- Some users have written application workflows that automatically deploy edge workflows on registration. Those workflows can be removed in favor of including one or more of the edge workflows on disk.
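The on-demand registration flow in the first bullet above is a simple request and reply exchange. Edge workflows are built from nodes rather than written in Python, so the sketch below only illustrates the shape of that exchange. Everything specific in it is an assumption: the endpoint URL, the `serialNumber` request field, and the `deviceId`, `accessKey`, and `accessSecret` reply fields are hypothetical names that your own experience endpoint would define.

```python
import json
import urllib.request
from typing import Dict

# Hypothetical experience endpoint; replace with a route you have defined.
REGISTRATION_URL = "https://example.invalid/api/register"

# Hypothetical reply fields; the real names depend on your endpoint's reply.
REPLY_FIELDS = ("deviceId", "accessKey", "accessSecret")


def build_registration_request(
    serial_number: str, url: str = REGISTRATION_URL
) -> urllib.request.Request:
    """Build the POST request the device sends to ask for credentials."""
    body = json.dumps({"serialNumber": serial_number}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_registration_reply(raw: bytes) -> Dict[str, str]:
    """Extract the device ID and credentials from the endpoint's JSON reply."""
    reply = json.loads(raw.decode("utf-8"))
    missing = [field for field in REPLY_FIELDS if field not in reply]
    if missing:
        raise ValueError("reply is missing fields: " + ", ".join(missing))
    return {field: reply[field] for field in REPLY_FIELDS}


def register(serial_number: str) -> Dict[str, str]:
    """Send the request and return the parsed credentials."""
    request = build_registration_request(serial_number)
    with urllib.request.urlopen(request, timeout=10) as response:
        return parse_registration_reply(response.read())
```

Keeping request construction and reply parsing separate from the network call makes it easy to check both halves without a live endpoint.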

Additional Edge Development Improvements

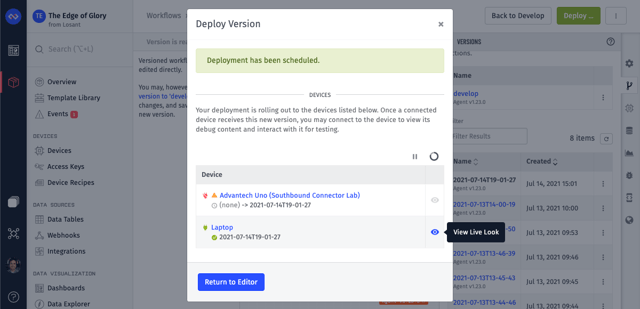

In our last platform update, we introduced a number of changes to the development lifecycle to streamline the process of building, deploying, and debugging edge workflows. With today’s release, we’ve added a couple of additional improvements to close the loop on the process.

First, after deploying a new workflow version, you will be greeted with a modal displaying all the devices that are receiving the new deployment as well as their status (whether the deployment has reached the device yet). From here, you can launch the Live Look modal to immediately debug the workflow on your hardware.

Also, it is now possible to bulk-delete workflow versions from the editor’s Versions tab. This will only delete versions that are neither currently deployed nor awaiting deployment. We added this feature because active edge workflow development usually creates a large number of workflow versions in a short period of time, which can quickly clutter a workflow’s version list.

Other Updates

As always, this release comes with a number of smaller feature improvements, including:

- Edge workflows get a new Agent Config: Get Node, which allows for reading edge agent configuration values from within a workflow.

- The Losant API Node can now operate on resources in different applications by providing an API token and an application ID. This was a much-requested feature from our users, many of whom have been using an HTTP Node to manually accomplish the cross-application communication.

- The Losant API Node is now also available in edge workflows, though there are significant behavior differences, particularly around the available operations, when using the node on the edge.

- We’ve added some options to Device State Triggers to allow for different behavior when batch-reporting device state. Users can now choose whether to fire the trigger on single reports, batch reports, or both; and in the case of batch reports, the workflow can fire just once with all the batch data, or once for each entry in the batch report.

- We’ve begun rolling out our Instance Manager to our web interface, allowing enterprise customers who make use of the feature to add and manage organizations from the platform UI. If you are a member of a Losant instance, you will find the option in the main navigation.

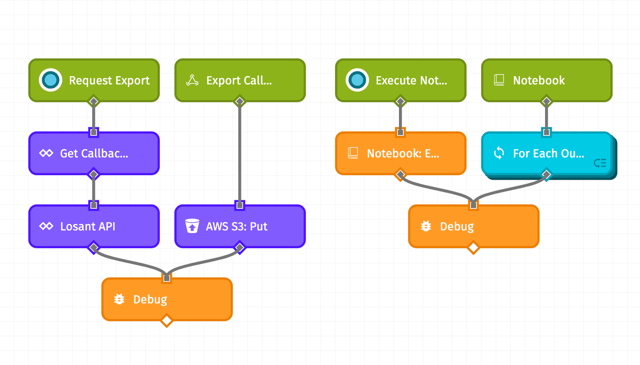

- You may now define ephemeral notebook outputs; that is, a file created during notebook execution no longer has to be pushed to application files or data tables. This comes from a user request for a notebook output that could push directly to an S3 bucket without publishing the contents to a public URL. This can be accomplished in combination with the following additional new feature …

- The AWS S3: Put Node can now accept a URL for the contents to put into the desired bucket. Combined with a Notebook Execution Trigger, whose payload includes signed URLs for each of your notebook outputs, this allows for streaming the outputs directly to your bucket.

- We’ve added a new evalExpression Handlebars helper, which allows for rendering the result of an expression evaluated against a context object.
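The Device State Trigger options described in the list above combine in a way that is easier to see in code. The Python sketch below models which workflow runs a single state report would produce; it is an illustration under stated assumptions, not the platform's implementation. The option names `fire_on` and `batch_mode` and their values are hypothetical labels, and the sketch assumes a batch report arrives as a list of entries while a single report arrives as one object.

```python
from typing import Any, Dict, List, Union

Report = Dict[str, Any]
Payload = Union[Report, List[Report]]

FIRE_ON = ("single", "batch", "both")  # hypothetical option values
BATCH_MODE = ("once", "each")          # hypothetical option values


def plan_runs(
    payload: Payload, fire_on: str = "both", batch_mode: str = "once"
) -> List[Payload]:
    """Return one item per workflow run that the report would cause."""
    if fire_on not in FIRE_ON:
        raise ValueError(f"fire_on must be one of {FIRE_ON}")
    if batch_mode not in BATCH_MODE:
        raise ValueError(f"batch_mode must be one of {BATCH_MODE}")

    is_batch = isinstance(payload, list)
    if is_batch and fire_on == "single":
        return []  # trigger ignores batch reports
    if not is_batch and fire_on == "batch":
        return []  # trigger ignores single reports
    if not is_batch:
        return [payload]  # one run for the single report
    if batch_mode == "once":
        return [payload]  # one run carrying the whole batch
    return list(payload)  # one run per entry in the batch


if __name__ == "__main__":
    batch = [{"data": {"temp": 20}}, {"data": {"temp": 21}}]
    print(len(plan_runs(batch, fire_on="both", batch_mode="once")))
    print(len(plan_runs(batch, fire_on="both", batch_mode="each")))
```

Firing once per entry keeps downstream logic identical to the single-report case, while firing once with the whole batch suits workflows that aggregate or bulk-process the entries.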

Template Library Update

We’re continuing to grow our Template Library with new entries designed to demonstrate Losant best practices, shorten time to market, and improve end-user experience.

With today’s release, we’ve also added the Stream Exports to AWS S3 template, which demonstrates how to put notebook outputs and data exports directly into an S3 bucket using the product updates described above.

What’s Next?

With every new release, we listen to your feedback. By combining your suggestions with our roadmap, we can continue to improve the platform while maintaining its ease of use. Let us know what you think in the Losant Forums.