Straight out of Kubrick's 2001: A Space Odyssey, Amazon's popular Echo AI device has brought artificial intelligence right into our living rooms. If you have Alexa in your home, you know how convenient it is to get the weather or hear a random joke on command (she's actually pretty funny). Here's a DIY version that's as fun to make as it is to interact with once it's done.

Overview

In this project we will create an Amazon Echo clone based on the Intel Edison hardware and the IBM Watson platform. Note that your "Alexa" may not be as capable as Amazon's, but it will be a whole lot cheaper and a lot more fun to build.

During the project we will cover the following topics:

- Capturing audio with a USB microphone.

- Sending audio to a Bluetooth speaker.

- Using Johnny-Five to interface with the Edison's IO.

- Using IBM's Watson Speech-to-Text and Text-to-Speech services.

Getting Started

What you'll need to complete this project:

- An Intel Edison with Arduino Expansion Board

- USB microphone (I used an Audio-Technica AT2020 USB.)

- Bluetooth speaker (I used an Oontz Angle.)

- An IBM Bluemix Account - Bluemix Registration

- A working knowledge of Node.js

If you haven't already done so, you'll need to set up your Edison and flash the latest firmware. You can follow our quick article on Getting Started with the Intel Edison or check out Intel's Getting Started Guide.

NOTE: I'm using the Intel XDK IoT Edition because it makes debugging and uploading code to the board very easy. To learn more about the IDE and how to get started with it, check out Getting Started with the Intel XDK IoT Edition. It is not required for this project, though.

Connect Bluetooth Speaker

Open a terminal on your Edison using either of the guides above.

Make your Bluetooth device discoverable. In my case, I needed to push the pair button on the back of the speaker.

In the terminal on your board, type the following:

root@edison:~# rfkill unblock bluetooth

root@edison:~# bluetoothctl

[bluetooth] scan on

This starts the Bluetooth manager on the Edison and begins scanning for devices. The results should look something like this:

Discovery started

[CHG] Controller 98:4F:EE:06:06:05 Discovering: yes

[NEW] Device A0:E9:DB:08:54:C4 OontZ Angle

Find your device in the list and pair with it.

[bluetooth] pair A0:E9:DB:08:54:C4

In some cases, you may also need to connect to the device.

[bluetooth] connect A0:E9:DB:08:54:C4

Exit the Bluetooth Manager.

[bluetooth] quit

Let's verify that PulseAudio recognizes your device:

root@edison:~# pactl list sinks short

If all is well, your device will be listed as a sink, and its name will start with bluez_sink, as in the example output below.

0 alsa_output.platform-merr_dpcm_dummy.0.analog-stereo module-alsa-card.c s16le 2ch 48000Hz SUSPENDED

1 alsa_output.0.analog-stereo module-alsa-card.c s16le 2ch 44100Hz SUSPENDED

2 bluez_sink.A0_E9_DB_08_54_C4 module-bluez5-device.c s16le 2ch 44100Hz SUSPENDED

Now let's set the Bluetooth device as the default sink for the PulseAudio server:

root@edison:~# pactl set-default-sink bluez_sink.A0_E9_DB_08_54_C4
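You can also script the sink lookup instead of copying the name by hand. The sketch below uses a hypothetical helper, `findBluetoothSink`, which is not part of the project's code. It scans `pactl list sinks short` output for the first sink whose name starts with `bluez_sink`, and it assumes the whitespace-separated column layout shown above.

```javascript
// Hypothetical helper: pick the first Bluetooth sink out of `pactl list sinks short` output.
// Assumes the layout shown above: index, sink name, module, sample spec, state.
function findBluetoothSink(pactlOutput) {
  for (const line of pactlOutput.split('\n')) {
    const columns = line.trim().split(/\s+/);
    // The sink name is the second column; Bluetooth sinks start with "bluez_sink".
    if (columns.length > 1 && columns[1].startsWith('bluez_sink')) {
      return columns[1];
    }
  }
  return null; // No Bluetooth sink found (speaker not paired or not connected).
}

const sample = [
  '0 alsa_output.platform-merr_dpcm_dummy.0.analog-stereo module-alsa-card.c s16le 2ch 48000Hz SUSPENDED',
  '1 alsa_output.0.analog-stereo module-alsa-card.c s16le 2ch 44100Hz SUSPENDED',
  '2 bluez_sink.A0_E9_DB_08_54_C4 module-bluez5-device.c s16le 2ch 44100Hz SUSPENDED',
].join('\n');

console.log(findBluetoothSink(sample)); // bluez_sink.A0_E9_DB_08_54_C4
```

On the board, you could feed it the output of `require('child_process').execSync('pactl list sinks short').toString()`. You would then pass the returned name to `pactl set-default-sink`.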

Connect USB Microphone

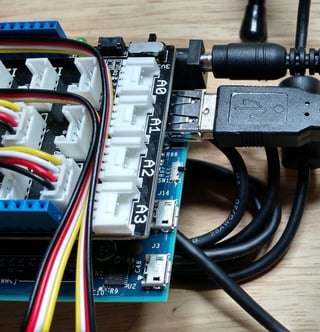

The Edison has two USB modes: host mode and device mode. To use a USB microphone, you'll need to switch the Edison into host mode. Flip the microswitch, located between the standard-sized USB port and the micro USB port, towards the large USB port. You will also need to power the Edison with an external DC power supply rather than through the micro USB port.

Then simply plug your microphone into the large USB port.

Let's make sure the Edison recognizes our microphone as an audio source by using the arecord command.

root@edison:~# arecord -l

The output lists all of the available hardware capture devices. Locate your USB Audio device and make note of its card number and device number. In the example output below, my mic is device 0 on card 2.

...

card 2: DSP [Plantronics .Audio 655 DSP], device 0: USB Audio [USB Audio]

Subdevices: 1/1

Subdevice #0: subdevice #0
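The card and device numbers combine into the ALSA device string `hw:<card>,<device>`, which arecord's -D option takes later. The sketch below uses a hypothetical helper, `findUsbMic`, to show that mapping. It assumes the mic's line contains "USB Audio", as in the example output above.

```javascript
// Hypothetical helper: turn `arecord -l` output into an ALSA device string like "hw:2,0".
// Assumes the USB mic's line contains "USB Audio", as in the example output above.
function findUsbMic(arecordOutput) {
  const pattern = /^card (\d+):.*, device (\d+): (.*)$/;
  for (const line of arecordOutput.split('\n')) {
    const match = line.trim().match(pattern);
    if (match && match[3].includes('USB Audio')) {
      return `hw:${match[1]},${match[2]}`; // hw:<card>,<device>
    }
  }
  return null; // No USB capture device listed.
}

const listing = [
  'card 2: DSP [Plantronics .Audio 655 DSP], device 0: USB Audio [USB Audio]',
  '  Subdevices: 1/1',
  '  Subdevice #0: subdevice #0',
].join('\n');

console.log(findUsbMic(listing)); // hw:2,0
```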

Let's Get Coding

In fewer than 200 lines of code (including comments), we'll have a system that will:

- Listen for a button press

- Play a sound to let the user know it's listening

- Capture 5 seconds of audio input

- Convert the audio input to text

- Perform a command or search on the input text

- Convert the text results to speech

- Play the speech audio to the user

I've broken the code up into easy-to-understand blocks. Let's walk through them and explain each one along the way.

Requires and Globals

Nothing special here. Just require the modules we need and declare some vars to use a little later.
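The original block isn't reproduced here, so the following is only a sketch of what such a preamble might look like. It loads the built-in child_process module and collects the settings used later in one object. The mic device and pins come from this article; the chime file name is hypothetical. The third-party package names in the comments are assumptions and are kept as comments because they only work on the board.

```javascript
// Built-in module used to launch arecord and gst-launch-1.0 as child processes.
const { spawn } = require('child_process');

// Settings used later on; adjust them to match your own hardware.
const config = {
  micDevice: 'hw:2,0',    // card 2, device 0 from `arecord -l`
  recordMs: 5000,         // capture five seconds of audio
  chimeFile: 'chime.wav', // hypothetical name for the "listening" sound
  buttonPin: 4,           // Grove button on GPIO 4
  ledPin: 6,              // Grove LED on GPIO 6
};

// Assumed third-party packages (install with npm on the Edison):
// const five = require('johnny-five');
// const Edison = require('edison-io');
// const watson = require('watson-developer-cloud');
```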

Initialize Watson Services

Another simple block of code, but this one requires a little prework. IBM Watson services require credentials for each specific service used. Follow the Obtaining credentials for Watson services guide to get credentials for both the Speech-to-Text and the Text-to-Speech services.
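Since each service has its own username and password pair, it can help to keep them out of the source code. The following is a minimal sketch that reads them from environment variables. The helper `watsonCredentials` and the variable names (`STT_USERNAME` and so on) are illustrative assumptions, not part of the project.

```javascript
// Hypothetical helper: read one service's Watson credentials from environment variables.
// Variable names are illustrative; use whatever naming scheme you prefer.
function watsonCredentials(env, prefix) {
  const username = env[`${prefix}_USERNAME`];
  const password = env[`${prefix}_PASSWORD`];
  if (!username || !password) {
    throw new Error(`Missing ${prefix}_USERNAME or ${prefix}_PASSWORD`);
  }
  return { username, password };
}

// Each service gets its own pair: one for Speech-to-Text, another for Text-to-Speech.
const fakeEnv = {
  STT_USERNAME: 'stt-user', STT_PASSWORD: 'stt-pass',
  TTS_USERNAME: 'tts-user', TTS_PASSWORD: 'tts-pass',
};
console.log(watsonCredentials(fakeEnv, 'STT')); // { username: 'stt-user', password: 'stt-pass' }
```

On the board you would call `watsonCredentials(process.env, 'STT')` and `watsonCredentials(process.env, 'TTS')`. You would then pass each result to the corresponding Watson service constructor.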

Text-to-Speech and Speech-to-Text Magic

First, let's take a look at the Text-to-Speech (TTS) function. There are two parts to TTS: (1) converting the text to audio and (2) playing the audio.

For the first part, we use the IBM Watson Text-to-Speech service, which couldn't make it any easier. We pass the text we want converted, along with the audio format we want back, into the synthesize method, and it returns a Stream.

For the second part, we use GStreamer, more specifically gst-launch. We take the Stream returned from synthesize and pipe it directly into the stdin of a gst-launch-1.0 child process. GStreamer then processes it as a WAV file and sends it to the default audio output.
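Here is a sketch of the playback half. It assumes a gst-launch-1.0 pipeline of `fdsrc fd=0 ! wavparse ! pulsesink`, meaning read stdin, parse WAV, and play through PulseAudio's default sink (the Bluetooth speaker set earlier). The article doesn't list the exact elements, so treat them as an assumption. `stdinWavArgs` and `playStream` are illustrative names.

```javascript
const { spawn } = require('child_process');

// Assumed pipeline: read WAV data from stdin (fd 0), parse it, and play it through
// PulseAudio's default sink.
function stdinWavArgs() {
  return ['fdsrc', 'fd=0', '!', 'wavparse', '!', 'pulsesink'];
}

// Pipe a readable WAV stream (for example, the one returned by synthesize) into
// gst-launch-1.0 and resolve once playback finishes.
function playStream(audioStream) {
  return new Promise((resolve, reject) => {
    const player = spawn('gst-launch-1.0', stdinWavArgs());
    player.on('error', reject);       // e.g. gst-launch-1.0 is not installed
    player.stdin.on('error', reject); // e.g. the player exited before reading everything
    player.on('close', (code) =>
      code === 0 ? resolve() : reject(new Error(`gst-launch-1.0 exited with ${code}`)));
    audioStream.pipe(player.stdin);
  });
}

console.log(['gst-launch-1.0', ...stdinWavArgs()].join(' '));
// gst-launch-1.0 fdsrc fd=0 ! wavparse ! pulsesink
```

Wrapping the child process in a Promise lets the main flow wait for speech to finish before resetting for the next button press.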

Next let's look at the Speech-to-Text (STT) function. As with the TTS function, there are two main parts.

The first is capturing the audio, for which we use arecord. arecord is fairly straightforward, with the exception of the -D option. Earlier, when we set up the USB microphone, we used arecord -l to confirm the system saw it. That also gave us the card and device numbers associated with the mic. In my case, the mic is device 0 on card 2. Therefore, the -D option is set to hw:2,0 (hardware device, card 2, device 0). By not providing a file to record the audio to, we tell arecord to send all data to its stdout.

Now we take the stdout from arecord and pass it into the recognize method on the STT service as the audio source. The arecord process will run forever unless we kill it, so we set a five-second timeout and then kill the child process.
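The following is a sketch of the capture step: arecord with -D set to the mic, WAV output on stdout, and a timer that kills it. The sample format, rate, and channel count below are assumptions, so pick values your mic supports. If the raw hw: device rejects them, ALSA's plughw:2,0 device can convert for you. `arecordArgs` and `recordFor` are illustrative names.

```javascript
const { spawn } = require('child_process');

// -D selects the mic (hw:card,device), -t wav writes a WAV header, and with no output
// file arecord streams to stdout. Format, rate, and channel count are assumptions.
function arecordArgs(device) {
  return ['-D', device, '-t', 'wav', '-f', 'S16_LE', '-r', '44100', '-c', '1', '-q'];
}

// Start arecord and kill it (SIGTERM by default) after `ms` milliseconds.
// The returned child's stdout is the audio stream to hand to the STT recognize call.
function recordFor(device, ms) {
  const recorder = spawn('arecord', arecordArgs(device));
  const timer = setTimeout(() => recorder.kill(), ms);
  recorder.on('close', () => clearTimeout(timer)); // skip the kill if arecord already exited
  return recorder;
}
```

arecord also has a `-d` option (duration in seconds) that stops the recording on its own. The timer approach shown here mirrors the one described above and keeps the cutoff under the Node process's control.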

Once we get the STT result back, we grab the first transcript from the response, trim it, and return it.
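A Watson STT response carries a `results` array, and each result holds ranked `alternatives` with a `transcript` string. The sketch below takes the top alternative of the first result and trims it. Returning an empty string when nothing was recognized is this sketch's own choice, and `firstTranscript` is an illustrative name.

```javascript
// Pull the top transcript out of a Speech-to-Text response and trim it.
// Returns '' when nothing was recognized (a choice made for this sketch).
function firstTranscript(response) {
  const results = (response && response.results) || [];
  if (results.length === 0 || !results[0].alternatives || results[0].alternatives.length === 0) {
    return '';
  }
  return results[0].alternatives[0].transcript.trim();
}

const sample = {
  results: [{ alternatives: [{ transcript: 'calculate five plus five ', confidence: 0.92 }], final: true }],
  result_index: 0,
};
console.log(JSON.stringify(firstTranscript(sample))); // "calculate five plus five"
```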

Play Local Wav File

We have already covered using GStreamer, but to play a local file the arguments are a little different.
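The sketch below shows the local-file variant, assuming the same WAV-to-PulseAudio pipeline as the TTS playback. The only change is that `filesrc location=...` replaces reading from stdin. `fileWavArgs` and `playWav` are illustrative, and the file name is hypothetical.

```javascript
const { spawn } = require('child_process');

// For a file on disk, filesrc with location= replaces the stdin source.
function fileWavArgs(path) {
  return ['filesrc', `location=${path}`, '!', 'wavparse', '!', 'pulsesink'];
}

// Play a local WAV file and resolve when gst-launch-1.0 exits cleanly.
function playWav(path) {
  return new Promise((resolve, reject) => {
    const player = spawn('gst-launch-1.0', fileWavArgs(path));
    player.on('error', reject);
    player.on('close', (code) =>
      code === 0 ? resolve() : reject(new Error(`gst-launch-1.0 exited with ${code}`)));
  });
}

console.log(fileWavArgs('chime.wav').join(' '));
// filesrc location=chime.wav ! wavparse ! pulsesink
```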

Setup Johnny-Five

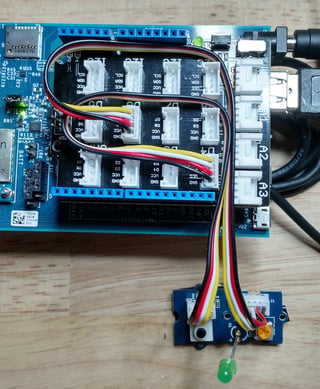

Here we set up the Edison IO in Johnny-Five and listen for the board to complete initialization. Then we attach a button to GPIO pin 4 and an LED to GPIO pin 6. To do this, I used a Grove Base Shield along with the Grove button and LED modules. You could attach a button and LED using a breadboard instead.

Finally, we add a listener for the button's press event, which calls the main function that we will look at next.
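The following is a sketch of the board setup. It takes the johnny-five and edison-io modules as arguments so it can be exercised without hardware. `Board`, `Button`, `Led`, and the `ready` and `press` events are Johnny-Five APIs. The `busy` flag, which ignores presses while a request is still running, is an addition of this sketch and not necessarily part of the project.

```javascript
// Wire up the Edison board, button (GPIO 4), and LED (GPIO 6), then call `main` on press.
// `five` and `Edison` are the johnny-five and edison-io modules, passed in for testability.
function setupBoard(five, Edison, main) {
  const board = new five.Board({ io: new Edison(), repl: false });
  board.on('ready', () => {
    const button = new five.Button(4);
    const led = new five.Led(6);
    let busy = false; // assumption: ignore presses while a request is in flight
    button.on('press', () => {
      if (busy) return;
      busy = true;
      Promise.resolve()
        .then(() => main(led))
        .catch((err) => console.error(err))
        .then(() => { busy = false; });
    });
  });
  return board;
}
```

On the Edison this would be called as `setupBoard(require('johnny-five'), require('edison-io'), main)`.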

Main

We now have all the supporting pieces, so let's put together the main application flow. When main runs, we first use the playWav function defined earlier to play a chime, letting the user know we are listening. You can download the WAV file I used from the project's repo. We then listen for a command, perform the search, and play the results; we'll look at each of those functions next.

Finally, we handle any errors that may have occurred and get ready to do it all again.
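The following is a sketch of that flow using async/await: chime, listen, search, speak, then reset. The step functions are passed in so the flow can be tested without hardware. Their names follow the article, but their exact signatures are assumptions, as is turning the LED off in a `finally` block. The chime file name is hypothetical.

```javascript
// Build main() from its steps: chime, listen, search, speak, then reset.
function createMain({ playWav, listen, search, speak }) {
  return async function main(led) {
    try {
      await playWav('chime.wav');         // let the user know we're listening
      const command = await listen(led);  // LED on, capture audio, return transcript
      const answer = await search(command);
      await speak(answer);
    } catch (err) {
      console.error('Request failed:', err.message); // log it and carry on
    } finally {
      led.off(); // ready for the next button press
    }
  };
}
```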

The Bread-and-Butter

The listen function simply turns on the LED to show we are listening then calls stt to capture the command.

The search function uses the DuckDuckGo Instant Answer API to perform the search, then returns the best answer.
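Here is a sketch of the two halves of search: building the request URL and picking an answer from the JSON body. The `q`, `format`, `no_html`, and `skip_disambig` parameters and the `Answer`, `AbstractText`, `Definition`, and `RelatedTopics` fields come from the Instant Answer API. The preference order used to choose the "best" answer is an assumption of this sketch, as are the helper names.

```javascript
// Build an Instant Answer API request: JSON output, HTML stripped, disambiguation skipped.
function buildSearchUrl(query) {
  const params = new URLSearchParams({ q: query, format: 'json', no_html: '1', skip_disambig: '1' });
  return `https://api.duckduckgo.com/?${params}`;
}

// Assumed preference order: direct Answer, then the abstract, then a definition,
// then the first related topic that has text.
function bestAnswer(body) {
  const firstTopic = (body.RelatedTopics || []).find((topic) => topic.Text);
  const candidates = [body.Answer, body.AbstractText, body.Definition, firstTopic && firstTopic.Text];
  const hit = candidates.find((c) => typeof c === 'string' && c.trim() !== '');
  return hit || "Sorry, I couldn't find an answer.";
}

console.log(buildSearchUrl('calculate five plus five'));
// https://api.duckduckgo.com/?q=calculate+five+plus+five&format=json&no_html=1&skip_disambig=1
```

On a runtime with a global `fetch` (Node 18+), the two pieces combine as `fetch(buildSearchUrl(q)).then((r) => r.json()).then(bestAnswer)`.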

Finally, the speak function takes the search result and passes it into the tts function.

Wrap Up

Deploy the code to your Edison and run it. Wait a few seconds for the app to initialize, then press the button. You'll hear a sound and the LED will light up. Speak your search phrase clearly into the mic, then sit back and enjoy your new toy.

You'll find it's great at handling single words and simple phrases. You can also use it to do simple math problems by starting your phrase with "calculate", like "calculate five plus five."

Below you'll find a list of additional resources used while making this project but not linked to above. I encourage you to take a look at them to learn a little more about the technologies used. You can also find all the code for this project at https://github.com/Losant/example-edison-echo.

Enjoy!